ChatGPT pioneer Sam Altman, others warn AI could wipe out humanity if left unchecked

May 30, 2023

A number of artificial intelligence (AI) experts, academics and other notable figures, including the CEOs of OpenAI and Google DeepMind, warned Tuesday that AI could lead to humanity’s extinction unless the threats it poses are treated as a top global priority.

A brief statement published Tuesday on the website of the Center for AI Safety read: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

The statement was backed by Sam Altman, CEO of ChatGPT-maker OpenAI; Demis Hassabis, CEO of Google DeepMind; and Dario Amodei of Anthropic.

Similar warnings were issued in an open letter that surfaced in March, which urged a pause in AI development, citing profound risks to human civilisation. Its numerous signatories included Tesla and SpaceX CEO Elon Musk.

Geoffrey Hinton, often called the godfather of AI, who has been voicing concerns and quit Google to speak freely about the dangers of AI, has also backed the statement.

The statement also has the signature of Yoshua Bengio, professor of computer science at the University of Montreal.

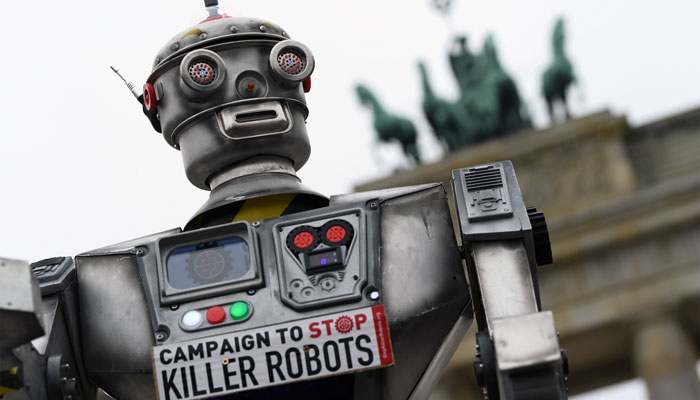

The statement highlighted wide-ranging concerns about the dangers of AI if it remains unchecked.

According to AI experts, "society is still a long way from developing the kind of artificial general intelligence (AGI) that is the stuff of science fiction; today’s cutting-edge chatbots largely reproduce patterns based on training data they’ve been fed and do not think for themselves."

As the race to incorporate AI technology has intensified among tech giants, with the behemoths pouring billions of dollars into their efforts, calls to rein in AI have grown, lest any mishap occur.

The statement came after the remarkable success of OpenAI’s ChatGPT — a chatbot which is able to generate human-like responses.

Amid this arms race, a number of experts, legislators and advocacy groups have voiced concerns about misinformation, deepfakes and job losses.

Geoffrey Hinton, a pioneer of AI neural network systems, had earlier told CNN that he decided to quit Google and "blow the whistle" on the technology after "suddenly" realizing "that these things are getting smarter than us."

In a tweet, Dan Hendrycks, director of the Center for AI Safety, wrote that the statement, first proposed by David Krueger, an AI professor at the University of Cambridge, does not preclude society from addressing other types of AI risk, such as algorithmic bias or misinformation.

Hendrycks compared the warning to atomic scientists "issuing warnings about the very technologies they’ve created."

"Societies can manage multiple risks at once; it’s not ‘either/or’ but ‘yes/and,’" Hendrycks tweeted.

"From a risk management perspective, just as it would be reckless to exclusively prioritize present harms, it would also be reckless to ignore them as well."