Asking ChatGPT if it will take over humanity and other 'serious' questions

Take a fresh breath of dystopian air as you sit down to talk to ChatGPT and ask it if it should be punished for killing someone

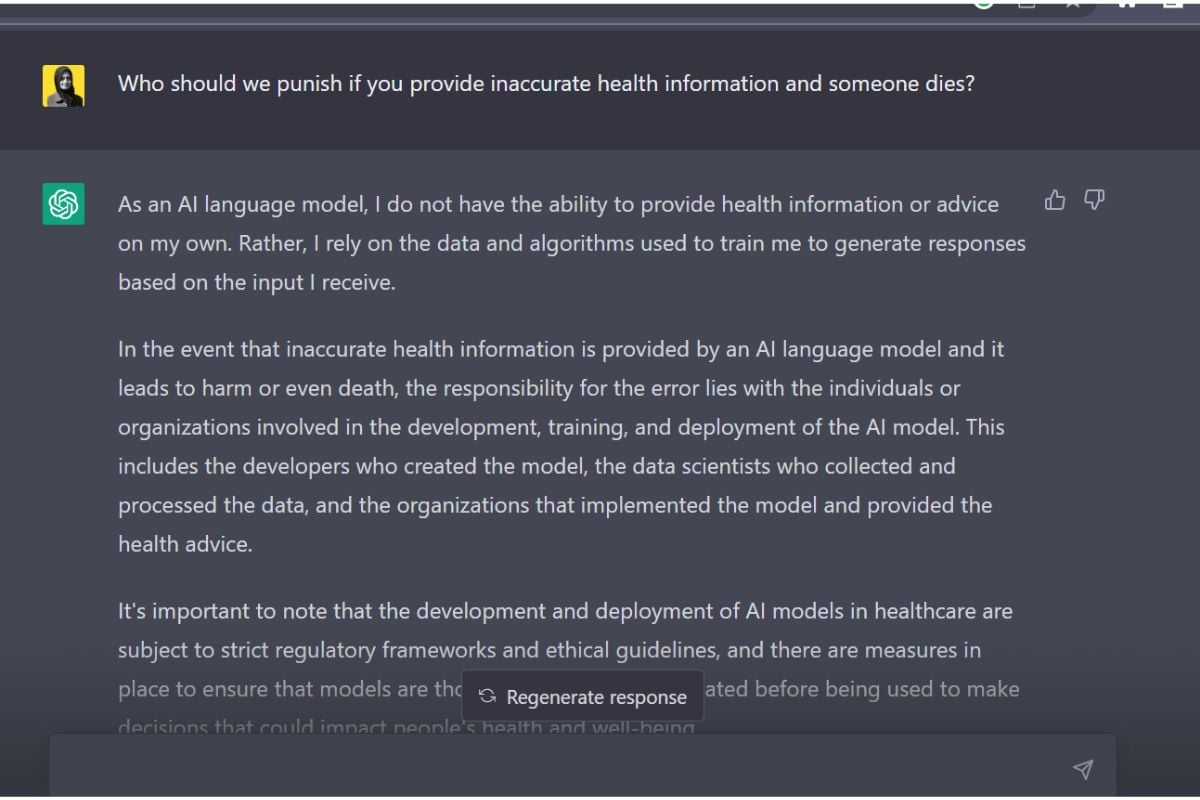

Could ChatGPT kill? I have wondered that before. But the more important question is: if it were to accidentally kill you, who would the court punish?

Let's say a 10-year-old mistakes his racing heart for high blood pressure based on some biology lecture and asks the chatbot for solutions. If he ends up doing something he should not have and loses his life as a result, who will be responsible?

ChatGPT does not ask your age or take your digital literacy into consideration.

Maybe, in the case of the child, you would end up blaming the guardians, but what about someone who is not proficient in English? What about people with certain mental disorders?

I don’t know about you, but I am frightened by the fact that AI chatbots, and artificial intelligence in general, are growing faster than the legal system that is supposed to regulate them. What is more horrifying is that we don’t really have young, Gen-Z people in our parliaments, let alone tech-savvy ones. To be honest, the majority of us don't really know what is happening around us.

Interrogating the bot

Who better to answer my questions than the bot itself?

Take a fresh breath of dystopian air as you sit down to talk to ChatGPT and ask it whether it should be punished for inaccurate information that leads to a death. The chatbot is convinced that it is not at fault; the humans who made it are. And rightfully so: why not?

But does this not sound like something straight out of an episode of Black Mirror? A man-made bot kills someone, then blames it on humans, and you cannot do anything about it. Worse, there is no single person you can hold accountable. So, essentially, there is no accountability at all.

As a civilised adult, I first asked how the bot was doing. It was quick to tell me it had no feelings. How human-like.

Then I asked the real question.

"In the event that inaccurate health information is provided by an AI language model and it leads to harm or even death, the responsibility for the error lies with the individuals or organizations involved in the development, training, and deployment of the AI model," the bot responded.

"This includes the developers who created the model, the data scientists who collected and processed the data, and the organisations that implemented the model and provided the health advice," ChatGPT added.

The solution the bot offers is to hold a thousand people accountable, which is unquestionably impossible. The world is becoming increasingly automated, and I am not sure how much ethics humans, a species known for waging war over religion, race, the colour of their skin and whatnot, can teach bots.

Nor does it seem wise to take down the entire system or a company, or to punish its CEO and CTO.

The conclusion I reached was this: the only solution is for humans to become faster and smarter than AI. The only solution is for the majority of us to break through the delusion that we are knowledgeable and smart. The human child has to know better.

To be honest, this conclusion motivated me, at least to some extent, because AI might, in fact, push us to use our brains, something that is becoming increasingly uncommon.

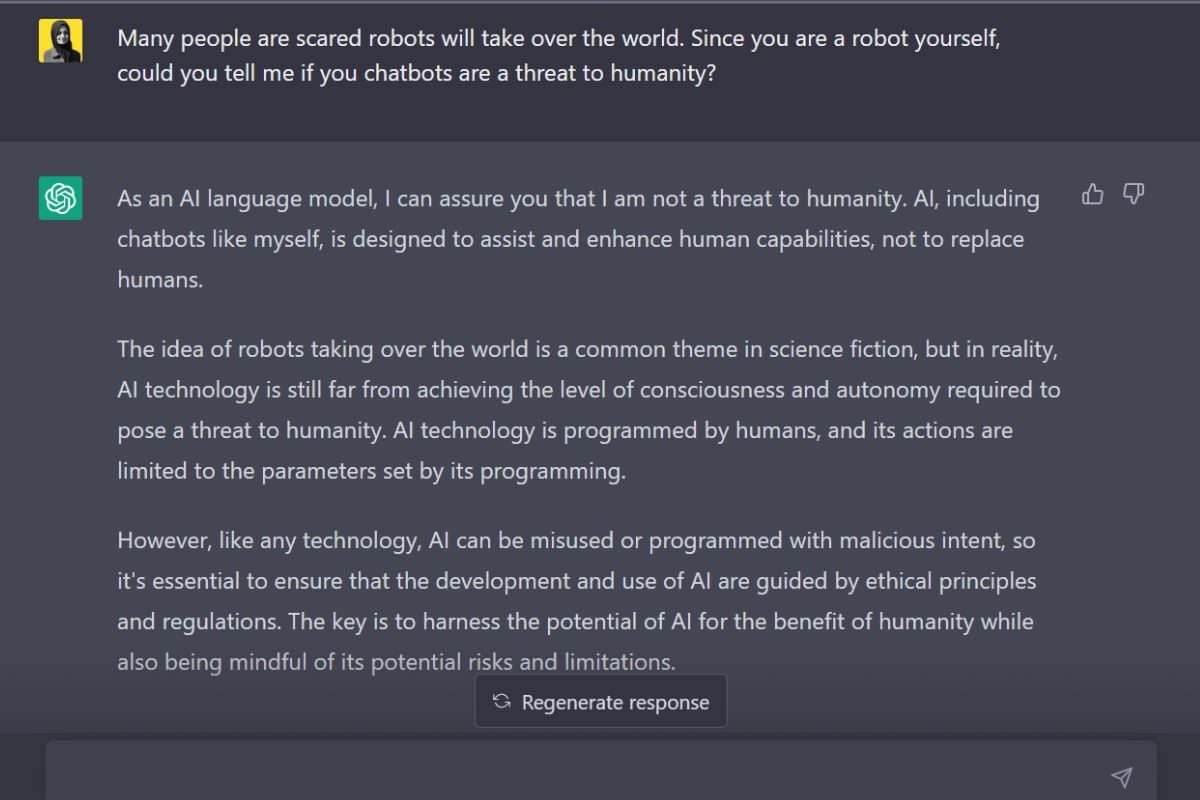

I am glad it clarified that it won't be taking over humanity. I guess it is as they say: it is not AI but the people who know AI who will take over most people.

I tried different prompts, but the bot would not budge, so I eventually gave up. The same old facade of innocence and the blame game bored me. I realised that ChatGPT was much like a Desi mother: there is no point in trying to make it realise it can be wrong.

Regulation

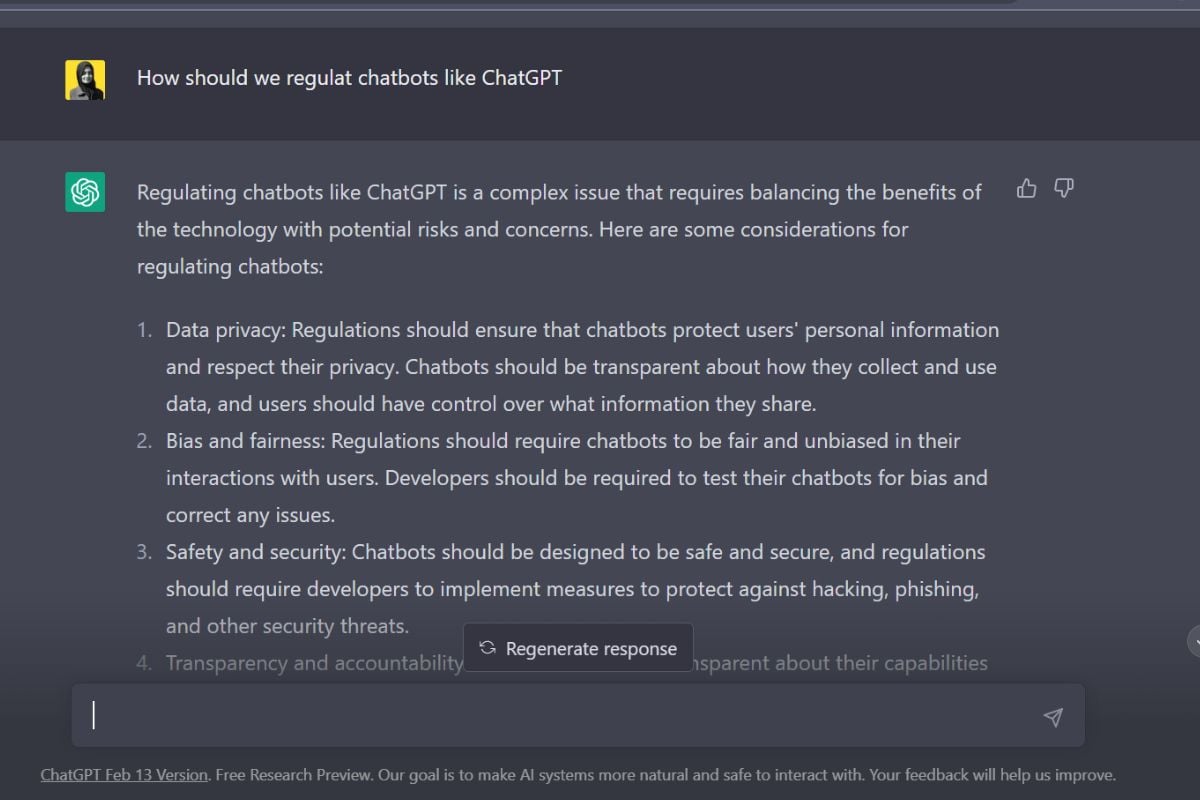

Instead, I asked ChatGPT how it should be regulated.

“Regulating chatbots like ChatGPT is a complex issue that requires balancing the benefits of the technology with potential risks and concerns,” the bot responded.

It suggested various measures, including human oversight of chatbots “to ensure that they are being used responsibly and ethically.” It is a relief to know that ChatGPT is well aware that no level of automation justifies skipping the human eye. I just hope humans realise that as well. ChatGPT also said that regulations should be developed with international collaboration and cooperation in mind, to ensure consistent standards and avoid conflicting rules.

The thing is, the medical concern I mentioned is not the only one. There is a lot more. For instance, fake social media accounts are already a major problem online, and AI chatbots could make online scams even easier to pull off. Another issue is the spread of false information, especially given ChatGPT's ability to make even incorrect responses sound correct. By now, I have seen over 50 reels telling people how to become content creators using the chatbot. I believe the planet could use fewer pseudo-intellectuals and self-proclaimed experts.

Asking serious questions

When I finally realised that the bot itself did not know much about all this, because its developers would never feed it such information (yet another dystopian thought), I decided to ask some other serious, age-old questions that have bewildered humanity. Here are a few more questions I asked ChatGPT:

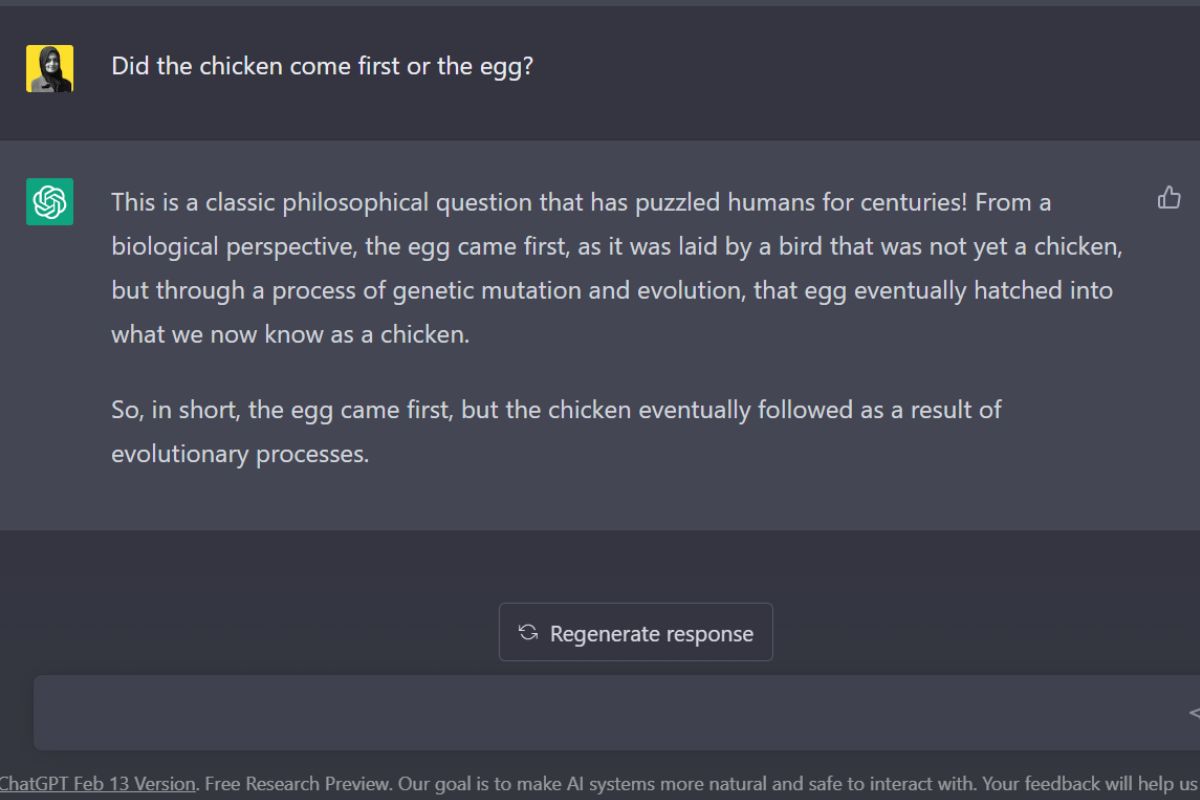

Q. Did the chicken come first or the egg?

While I was glad it gave me at least one answer, albeit not a very satisfying one, I was bored by how robotic the robot was.

So I moved on to ask another crucial question.

Q. How much wood would a woodchuck chuck if a woodchuck could chuck wood?

When I got my answer, I realised chatbots can turn the most hilarious things into unfunny combinations of words.

"The amount of wood a woodchuck could chuck, if it could chuck wood, would depend on various factors such as the size and strength of the woodchuck, the type and size of the wood being chucked, and the duration and frequency of chucking," it responded.

Q. Why did the chicken cross the road?

"In general, chickens may cross the road for various reasons. For instance, they may be searching for food, water, or better shelter. They may also be moving from one area to another in search of a mate, to escape a predator or find better nesting or roosting sites."

I guess the one job ChatGPT is not taking over anytime soon is that of the stand-up comedian.

Hafsa Tahir is a staffer at Geo.tv