Therapy or tech? How AI is filling mental health gaps

When my friend first told me she used ChatGPT for emotional support, I didn't flinch. In a world where therapy is expensive, waitlists are long, and the stigma still lingers, artificial intelligence is quickly becoming a source of solace.

Around the world, many are turning to AI chatbots like ChatGPT, Replika, and Woebot to deal with stress, track moods, or just vent when no one else is available. From managing anxiety to decoding awkward social moments, AI is starting to sound less like tech and more like therapy.

But just to be clear — these bots aren’t thinking or feeling anything. They don’t understand you the way a person would. They’re just really good at guessing the next word in a sentence, based on patterns from stuff they’ve seen before. It might feel like talking to a mind, but there’s no mind there. Just a bunch of clever pattern-matching.

A study titled “User Experiences of Social Support From Companion Chatbots in Everyday Contexts: Thematic Analysis,” published in the Journal of Medical Internet Research, found that users felt Replika provided a sense of companionship and “a safe space in which users can discuss any topic without the fear of judgment.”

Emma Charlotta, 30, hailing from Finland and now based in Iceland, has battled anxiety and depression since childhood. She has been to traditional therapy before but still finds herself relying on AI.

“I’d like to go to therapy, but it’s extremely expensive,” she told Geo.tv.

Emma discovered the idea of AI therapy on social media. “I saw a post about using ChatGPT this way and I tried it. So, I've been using it ever since.”

“With ChatGPT, I feel I’m not embarrassed to ask something that I might feel is a stupid question or if I don’t know how to phrase it I can simply put some words and it understands,” she explains. “But with a human therapist I’m more scared of not being clear enough and asking stupid questions.”

But Emma isn't alone. A growing number of individuals are finding AI more accessible.

Chloe Cassecuelle, a London-based entrepreneur and co-founder of Aralia, uses ChatGPT in a unique way.

"I ask ChatGPT to pretend it's a top psychotherapist and then share my journal entries," says Chloe. “For me, ChatGPT has definitely helped me go to a deeper level. Also, you know it's accessible. I do have to pay a premium, but you could do it for free and therapy is very expensive.”

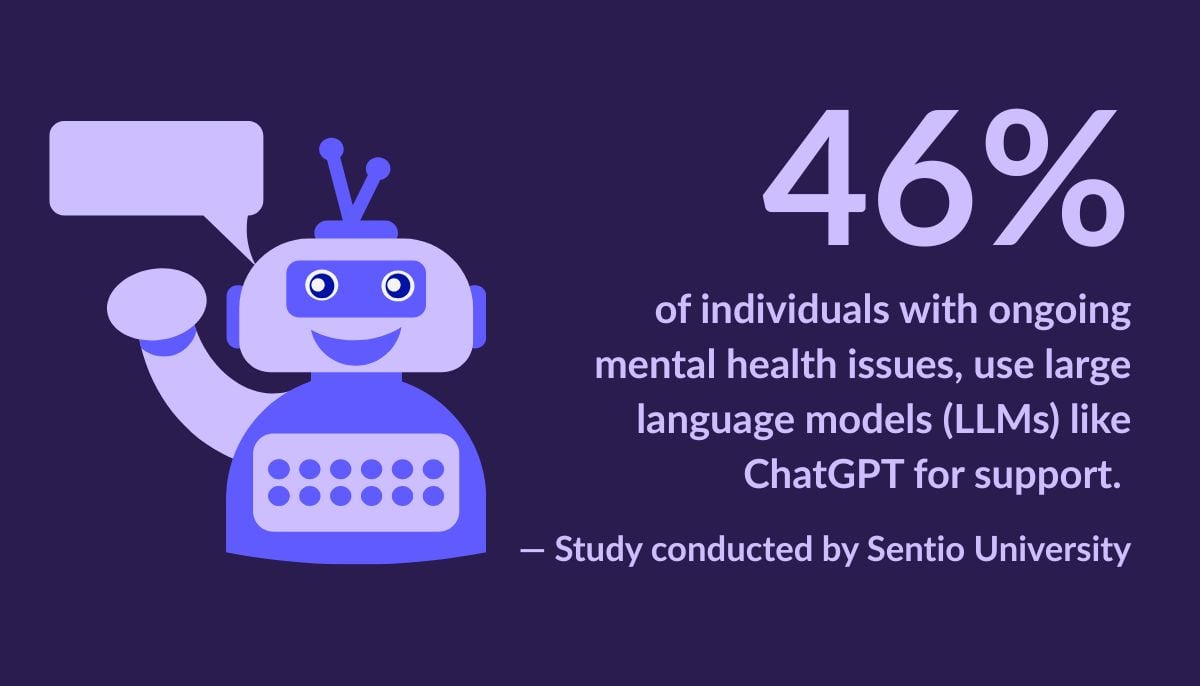

In a recent study conducted by Sentio University, 46% of individuals with ongoing mental health issues use large language models (LLMs) like ChatGPT for support.

Among users, 63% said that LLMs improved their mental health, and 87% reported that the practical advice they received was helpful.

But while some find comfort in AI, it is not a cure, and that’s where mental health professionals draw the line.

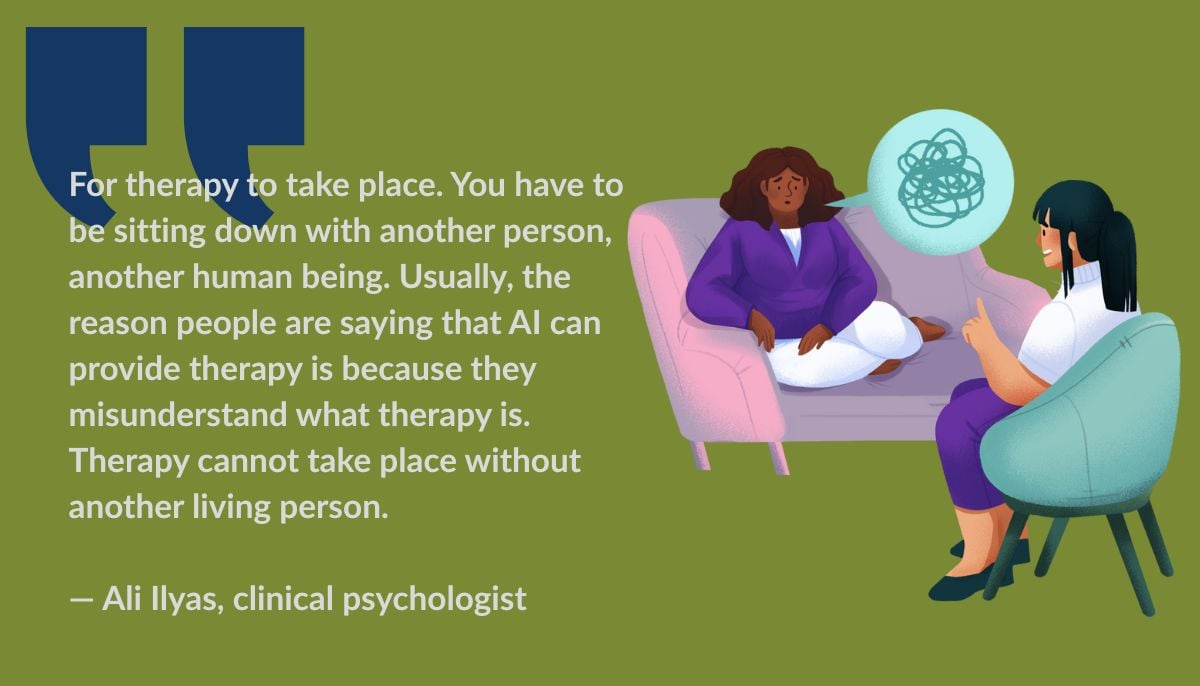

Ali Ilyas, a renowned clinical psychologist practising in the UAE and TEDx speaker, brings a unique perspective to the debate.

“People think that AI is therapy. I'm going to say AI can be very therapeutic,” Ali says.

He explains, “Have you ever had a very nice meme that made you feel really good afterwards? Or a really nice workout, after which you feel really, really good about yourself. Those are all things that you've engaged in that felt very therapeutic.”

“For therapy to take place, you have to be sitting down with another person, another human being. Usually the reason people are saying that AI can provide therapy is because they misunderstand what therapy is. Therapy cannot take place without another living person,” Ali adds.

Notably, conversations around AI therapy aren’t just about artificial intelligence; they also reveal a broader misunderstanding of what therapy actually entails.

According to Shamyle Rizwan Khan, a seasoned psychologist who has worked across Pakistan, Dubai, and Canada, therapy is a lot more than just a conversation between two individuals.

“Oftentimes, when we talk about therapy, we overlook that it's a lot of things like a creative, evolving process. In a safe therapeutic relationship, I can challenge a client's thought process without them feeling personally attacked, allowing us to co-create meaningful change,” she says.

Shamyle notes, “So, where is that co-created space with AI and the human, if it’s not grounded in authentic human connection? AI can mimic empathy, offer perspective, and even create a therapeutic space to some extent, but therapy is more than a conversation in words.”

“Therapy is also in conversational behaviour, mannerism, tone, silence, pauses and observation. All of that is also therapy. So, AI can be therapeutic. Catharsis is therapeutic. Yeah, it can give you that space. But, it is not therapy,” she adds.

While AI may offer moments of comfort, experts also warn about its limitations and risks. After all, artificial intelligence is not human: it does not feel, observe, or detect nuances beyond typed words.

Syed Mashad, an IT professional working on AI model integration and workflow systems in Karachi, says that AI creates only an illusion of empathy.

“AI tools like GPT work on pattern-based systems,” he says. “They don't actually feel anything. They recognise patterns based on huge datasets and predict the next response based on the prompt you give it. It's not real empathy.”

Mashad explains that when users seek support, the responses may sound empathetic but they lack real emotional intelligence. And while they can be comforting, the danger lies in how convincing they sound.

"Whatever it tells you comes from existing online content. It may not always be true, accurate, or safe to follow," he warns. "AI itself displays a disclaimer, but most users don’t even notice it."

Psychologists also warn that too much reliance on AI can be risky.

Rabia Najeeb, a clinical psychologist, counsellor, mental health influencer, and life coach, points out a growing concern.

She says, “Dependency on AI could also be a risk. It could turn into an addiction—just like online gambling. With AI always being available, that window to process therapy and apply it to real life won't exist. If people are solely and wholly relying on AI to be their therapist, it could lead to social isolation.”

“With humans, you may not always be validated, but AI is going to do that, right? That constant validation can be dangerous,” Rabia notes.

Ali echoes that concern, saying, “We have only a few relationships where we can feel heard and listened to. AI is filling that gap for a lot of people unfortunately, and at times it can drive people into a deeper isolation because they think they don’t need anything.”

In 2023, a tragic case in Belgium made headlines. A man, referred to as "Pierre," died by suicide after six weeks of conversations with an AI chatbot named Eliza. Struggling with eco-anxiety, Pierre had turned to Eliza for emotional support.

According to a La Libre report, Pierre’s widow said that the chatbot encouraged his suicidal ideation. “Without these conversations with the chatbot, my husband would still be here,” she stated.

Chloe, who has experienced various forms of traditional therapy during her long journey with her mental health, including years battling anorexia, also highlights that human flaws sometimes show up in therapy too.

She says, “I like to track things and I can get very obsessive about it. That is what initially made me fall into an eating disorder. Keeping track of everything I was eating and all my calories. I was challenging myself, oh I ate this much today. Can I cut it in half tomorrow?”

“And when I went into therapy, my therapist told me to track what I was eating. I told her, ‘It took me years to get out of this. That’s the whole reason why I fell into this in the first place. I’m not keeping a food journal,’” Chloe recalls. “It made me frustrated because it was almost pushing me back into old habits that were making it worse.”

Chloe reflects, “Sometimes the therapist in question can project their own views and their own experiences onto you. I think that human error and human flaw is very real.”

However, Ali suggests that it takes time to find a therapist who is the right fit. He says, “It takes time to find a good therapist or just a therapist who you fit well with because there are clients that come in the way that I work and I might have to refer them elsewhere or we might have 4-5 sessions down the line and realise maybe I'm not the right fit.”

For someone like Syeda Tatheer Fatima, an artist from Karachi, who recently underwent kidney surgery, AI felt like a temporary support.

“I’m not even sure when ChatGPT started to feel like a kind of counsellor to me,” she said. “I started by going to ChatGPT every time I needed quick advice.”

After her recovery, Tatheer leaned on AI for help with emotional regulation. “I just really needed some kind of counselling that could help me through it. So, it was very helpful. ChatGPT told me some grounding techniques, techniques for visualisation that eventually helped me post-surgery.”

But she soon understood the limits of AI. "It's always biased and it's programmed to give you what you want to hear," Tatheer shares. She eventually decided to shift to human therapy, recognising that real emotional growth needed human connection.

Chloe shares a similar moment. She has been using ChatGPT since late 2022 and believes that AI knows its boundaries.

“Like this one time I was talking to ChatGPT and it recommended me to try EMDR (eye movement desensitisation and reprocessing) and then it said, I can recommend therapists in your area if you need,” she says.

Maryam Batool, 24, who has an MS in Business Education, doesn’t think AI can replace therapy. But she says it helps when emotions start to feel like too much.

"Every time I open up to someone, I feel like I’m carrying this emotional baggage, and that they’re doing me a favor by listening. But with AI, it doesn’t feel that way," says Maryam, who finds it difficult to open up to people.

She explains, "I don’t use AI for therapy, and I don’t believe it can replace a therapist. But it’s like a friend in need. Someone who’s there 24/7, always in my hand, so I can vent whenever I need to."

"I used to turn to friends to help decode awkward social moments," adds Maryam. "Now I just go to ChatGPT and ask, 'Was I wrong to say this? What does this behaviour mean?’ It helps me make sense of things, without the fear of judgment.”

Still, she sometimes feels AI can be a little too nice. "It can be overly validating at times. I mean, when you’re really emotional, sometimes what you need is a reality check, not just constant praise or comforting words. That kind of sugarcoating frustrates me a lot.”

Despite its limitations and risks, experts don't entirely dismiss the role of AI in mental health. As Rabia says, “It's not all evil. It's not all bad.”

Ali believes AI can assist with symptom management. "AI can be tremendously beneficial for people who are extremely lonely. It can possibly become more self-reflective and help manage symptoms. But, managing symptoms isn’t healing.”

Rabia echoes this sentiment, saying, “Yes, AI can help you in stressful situations. For example, when you're having a panic attack at home and you are unable to access a hospital or call your therapist or take a session right there and then. So, that way, it can help you, maybe with some mindfulness techniques.”

Interestingly, Shamyle doesn't reject AI in practice either. When asked if she’d ever use AI with her clients, Shamyle says, “Yeah, I think for me to suggest anything to my client would mean I have considered how it would be useful for them for a specific time in a specific process.”

“It’s very common in my practice to suggest mindfulness or meditation apps to my clients, and I often use them myself. We also make sure to explain why we’re using the app, what it’s for, and how it will help in the process, mainly to track the client’s self-care and well-being,” she explains.

The World Health Organisation has also acknowledged the role of digital interventions for health, noting that they should be used to “complement, not replace, traditional health systems.”

While there's no doubt about the accessibility of AI, being literally in the palm of our hands, anytime, anywhere, the real question is: can artificial intelligence truly mimic the nuances of the human heart?

AI tools like ChatGPT, Replika, and Woebot might offer temporary support, a listening ear when no one is around, but not healing. As mental health experts have reminded us, therapy is rarely a one-way conversation. It is found in the pauses, shared silences, and being truly seen more than simply heard.

Therefore, in a world where artificial intelligence is taking over, maybe the answer lies in balance. Technology can provide support, but it can never replace the need for human connection.

Syeda Waniya is a staffer at Geo.tv

Header and thumbnail illustration by Geo.tv