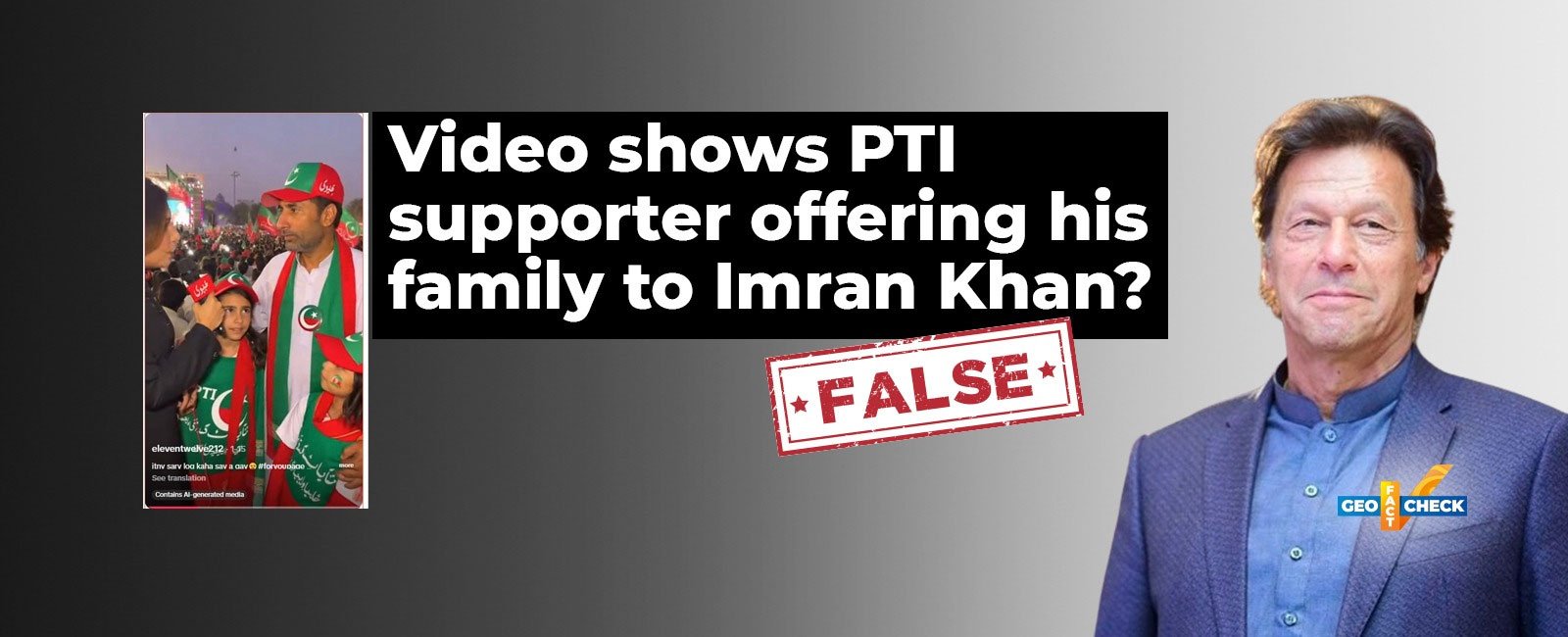

Fact-check: No, PTI supporter did not offer his wife and daughters to Imran Khan. The video is fake

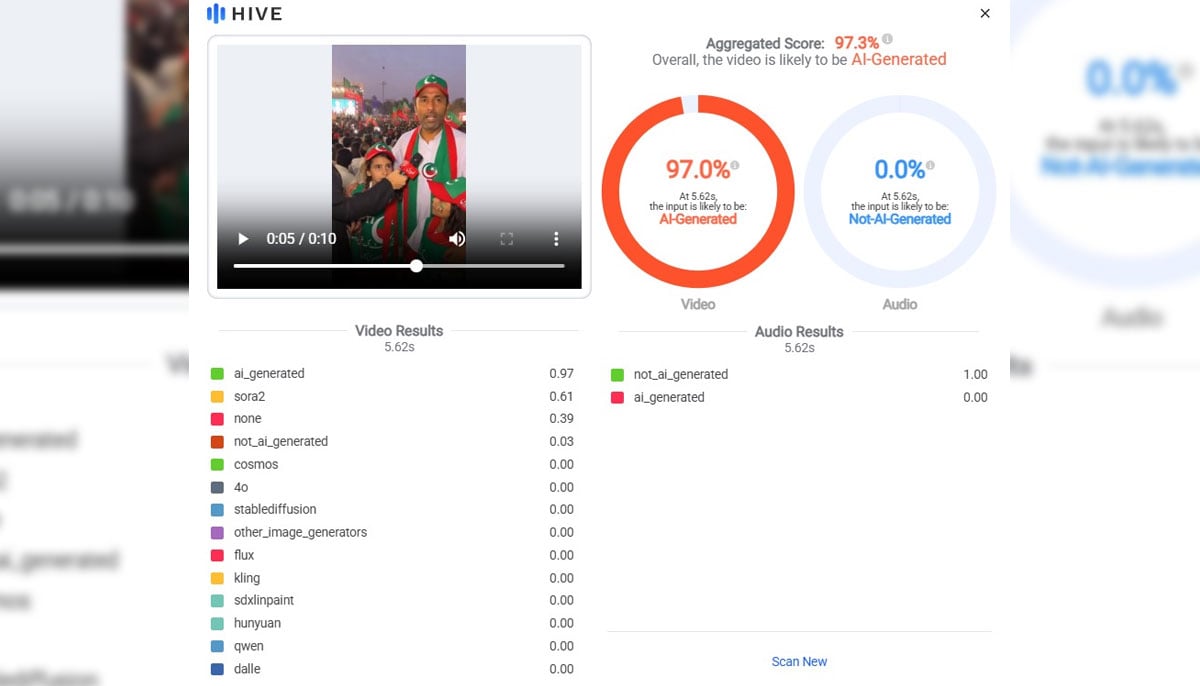

Hive Moderation, an AI content detection platform, assigned the video an aggregated score of 97.3%, suggesting a high likelihood of AI-generated or deepfake content

A video circulating on social media allegedly shows a supporter of the opposition party, the Pakistan Tehreek-e-Insaf (PTI), telling a reporter that his devotion to former prime minister Imran Khan is so extreme that he would be willing to sacrifice his wife and daughters for him.

The clip has triggered widespread discussion online, with several users accusing the party of fostering a cult-like following.

The claim is false. The interview is fabricated and was created using publicly available artificial intelligence (AI) tools.

Claim

On January 21, a user on X (formerly Twitter) shared a 13-second video purportedly showing a father standing with his two young daughters at a PTI rally. The video was captioned: "This is the last boundary of conscience, after which conscience itself questions a person: Tell me, how far are you willing to compromise your self-respect?"

In the footage, a reporter asks the man about the extent of his loyalty to former prime minister Imran Khan.

Reporter: "If Imran Khan asks you for your two daughters, will you give them to him?"

Man: "If, apart from these two, Imran Khan asks me for my wife, I would give her up as well."

At the time of writing, the video had been viewed over 21,000 times, received nearly 600 likes, and was reposted 416 times.

Similar claims were also shared on Facebook, Instagram and Threads.

Fact

Independent verification and multiple AI detection tools have confirmed that the video is fake.

Geo Fact Check reviewed the clip and found several indicators of AI manipulation. Notably, the Urdu text visible on the reporter's microphone and on the children's clothing appears distorted and nonsensical.

Additionally, the logo seen on the microphone also appears on the interviewee's cap, an inconsistency commonly associated with AI-generated visuals.

These elements confirm that the video is not authentic.

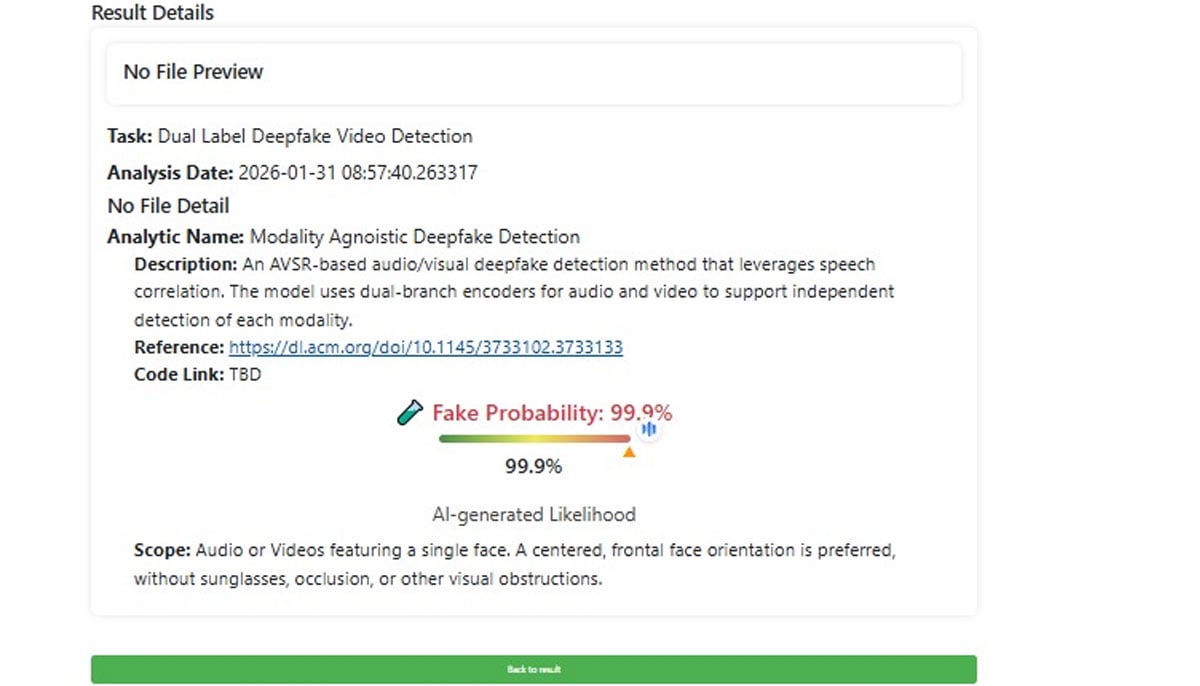

Geo Fact Check also analysed the footage using Hive Moderation, an AI content detection platform, which assigned an aggregated score of 97.3%, suggesting a high likelihood of AI-generated or deepfake content. Meanwhile, DeepFake-O-Meter, a tool developed by the University at Buffalo, assessed the clip as 99.9% likely to be AI-generated.

Verdict: The video is a deepfake produced using AI tools. Both the audio and visual components were fabricated to promote a misleading and sensational narrative.

Follow us @GeoFactCheck on X (Twitter) and @geo_factcheck on Instagram. If our readers detect any errors, we encourage them to contact us at [email protected]