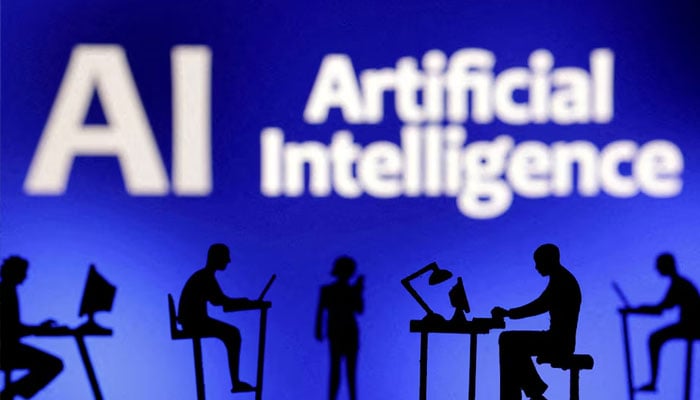

Pakistan's invisible AI workforce

Pakistan's cognitive labour is being harvested at a discount to feed global AI systems

July 09, 2025

In a modest Lahore flat at 2am, a young man opens his laptop to a queue of grim images: a child's bruised face, a burning car, shadowed crowds. His task is to tag each one ("child", "violence", "protest") before the next appears.

He earns Rs350 per hour ($1.50), nearly double the legal minimum wage of roughly Rs178 per hour, a figure based on Pakistan's Rs37,000 monthly minimum. There are no breaks, no contracts, no mental health support. When nausea hits, he vomits, then moves to the next image.

This is silent suffering. What he is doing, data annotation, is essential work for training platforms like ChatGPT, Google classifiers and Microsoft’s autopilot systems. Globally, the data-labelling industry is projected to reach $17 billion by 2030. Firms such as Sama.ai, Appen, Clickworker, Toloka and Amazon Mechanical Turk channel tasks to Pakistan via subcontractors because they can pay local annotators $1–3 per hour, while the same work pays $40–60 per hour in the Global North.

In Kenya, an investigation revealed that OpenAI contractors labelling child abuse and extremist content were paid under $2 per hour for work that caused trauma and PTSD. Pakistani workers endure the same emotional toll, without acknowledgement, compensation or support. The economic disparity is staggering.

He earns Rs350 per hour, nearly double the legal minimum, yet still a fraction of what others earn for less emotionally gruelling work. A tech startup employee might earn Rs1,500–2,000 per hour, up to six times more.

Pakistan's cognitive labour is being harvested at a discount to feed global AI systems. The subcontracting system is opaque. Universities promote their "AI labs" as innovation hubs, operating under undisclosed MOUs with foreign firms.

These partnerships never appear on transcripts or CVs, and the Higher Education Commission has issued no public guidance and applied no ethical scrutiny. The Ministry of IT & Telecom celebrates Pakistan’s $4 billion IT export figure yet remains silent on how much of that revenue is earned through underpaid, invisible labour.

Legally, these annotators are categorised as freelancers and receive no protections. Pakistan's Employment Act doesn't recognise micro-task labour, meaning there are no enforceable contracts, benefits, or right to unionise. Worse still, many annotators handle sensitive personal content, images of real people, often tied to security or surveillance, without informed consent, a clear violation of the Personal Data Protection Act 2023.

He is not alone. A Wired investigation found that underage workers in Pakistan falsify CNICs to join annotation platforms. Among a sample of 100 annotators, 20% were minors, some working graphic queues involving war footage, dismemberment and abuse. These violations are not rare; they are quietly normalised.

The psychological impact is undeniable. Studies from Kenya document insomnia, trauma, family breakdowns and suicidal thoughts among moderators. Pakistani workers describe similar symptoms, including emotional detachment, anxiety and mental fatigue, but receive no support. They are simply told to work faster.

This is digital colonialism. The rise of Pakistan's AI gig economy is a direct consequence of structural neglect. For decades, Pakistan has glorified "freelancing" as the great equaliser, pushing unemployed graduates into digital labour without building domestic tech infrastructure, labour protections or intellectual property pipelines. The result is a country that exports brilliance but imports value. Pakistan’s digital economy thrives not on innovation but on invisibility.

The state never asked who owns the models our students are training. It never questioned the long-term implications of funnelling thousands into labour that demands intelligence but offers no ownership, growth or credit. While India built a protective tech-industrial ecosystem around its engineers, Pakistan opened the gates for foreign platforms to extract work with no questions asked.

We are producing the next generation of machine intelligence, yet we remain locked in a colonial posture, with raw minds in and finished products out. Worse, this model is being celebrated by policymakers who count every gig payment as a win, ignoring the ethical and economic stagnation it causes.

The freelancers powering global AI are not being trained to build companies, assert their rights or lead innovation; they are being trained to be silent, compliant and grateful. Universities reward participation with vague certificates. Ministries award contracts to platforms that monetise trauma. And the result is a cognitive labour market that looks eerily like a plantation: high output, low cost, zero mobility. When AI companies pitch fairness and bias mitigation in their models, few ask if that fairness extends to the people who annotated the data.

When Pakistanis speak of digital transformation, no one asks who owns the transformation, or whether it includes the young man alone in his room at 2am, tagging graphic violence for $1.50 an hour.

This is less technological progress than it is consent-less participation in a machine economy that eats minds and calls it growth. Until we define what kind of digital economy we want, we will continue supplying our most precious resource, intelligence, without conditions.

And by the time we ask for justice, the machines may already have learned enough not to need us. Where imperial powers once extracted cotton, rubber and minerals, they now strip cognition. Pakistan's brightest minds are being leased by the hour to train machines they will never own, for companies they will never meet, to power technologies they will never afford. And the state remains complicit.

If Pakistan is to avoid being remembered as the ghostwriter of someone else’s AI empire, change must begin now. Digital labour must be defined and protected under Pakistan’s employment laws. Data annotation must be recognised as formal work with enforceable rights. Pay should begin at a living wage, no less than Rs500 per hour, and scale with skill and complexity.

The HEC must audit all foreign-facing AI labs on university campuses, publish all MOUs and ensure ethical oversight. Mental health support, mandatory content warnings and maximum exposure limits should be required by law, especially for content moderation queues. Underage entry to annotation platforms must be proactively banned using CNIC-based verification systems, and violators must be held accountable. Finally, Pakistan’s IT export growth must be tied to fair labour practices, and platforms and subcontractors should not be permitted to extract value without audits confirming wages and worker protections.

None of this requires a revolution. It just needs political will. A federal ministry could act by executive order. The courts could admit a public interest petition. Universities could freeze questionable partnerships until their students are no longer being used as silent inputs in someone else’s algorithm.

There is still time to reverse course. But if nothing changes, Pakistan will be known not for its innovation but as the country that exported its intelligence for free. The AI revolution may be automated, but behind every line of training data is a young man or woman clicking in the dark. And someone, somewhere, is profiting from their silence.

The writer is the director of the Centre for Law, Justice & Policy (CLJP) at Denning Law School. He holds an LLM in Negotiation and Dispute Resolution from Washington University.

Disclaimer: The viewpoints expressed in this piece are the writer's own and don't necessarily reflect Geo.tv's editorial policy.

Originally published in The News