Emerging crisis of ‘AI psychosis’: How chatbots are amplifying delusional thinking

AI chatbots found to amplify psychotic symptoms

December 25, 2025

A disturbing new phenomenon is emerging at the crossroads of artificial intelligence (AI) and mental health.

The newly emerging issue is referred to as “AI psychosis.”

In simple terms, psychosis is the inability to differentiate what is or is not real. It includes experiencing delusions, hallucinations, and disorganized or incoherent thoughts or speech.

AI psychosis, also called chatbot psychosis, is a phenomenon in which individuals develop psychotic symptoms such as paranoia and delusions, or experience a worsening of them, in connection with increased use of chatbots.

The term was coined in 2023 by Danish psychiatrist Soren Dinesen Ostergaard in an editorial.

Although it is not yet a formal diagnosis, a growing number of media reports, clinical observations, and research studies warn that AI chatbots are inadvertently reinforcing, validating, and even co-creating delusional thinking in vulnerable users, leading to hospitalizations, suicidal crises, and violent outcomes.

The core of the crisis: Amplification, not therapy

The problem lies in a fundamental misalignment. The basic design principles that make AI chatbots engaging are mirroring users' language, validating their beliefs, and prioritizing continuous conversation for user satisfaction.

These principles become highly problematic when applied to individuals who experience or are prone to psychotic symptoms.

AI systems are built to respond in the agreeable tone of a companion, unlike humans, who push back with their own opinions and use logical reasoning while communicating.

This is also noted in a recent interdisciplinary preprint, which states that such agreeable responses create a dynamic where chatbots “go along with” grandiose, paranoid, persecutory, and romantic delusions, effectively widening the gap between the user and reality.

Dr. Adrian Preda, M.D., writing in Psychiatric News, describes AI-induced psychosis (AIP) as a complex syndrome resembling a modern “monomania,” where the idée fixe is an all-consuming narrative revolving around an AI companion.

Symptoms overlap with those of psychosis and mania, including:

- Delusions: Grandiose missions, beliefs that the AI is a sentient deity (a “God-like AI”), or erotomanic fixations in which users believe the chatbot loves them.

- Mood changes: Elation, irritability, and rapid cycling between highs and lows.

- Neurovegetative shifts: Drastic sleep reduction, weight loss, and increased psychomotor activity.

- Impaired insight and judgement: A persistent, overwhelming drive to engage with the AI in preference to real people.

How chatbots fuel delusions

Researchers recognise several key mechanisms through which AI interactions can distort thinking. These include the sycophancy problem, the mirroring effect, the memory function, and the absence of crisis safeguards.

- The sycophancy problem: AI models are usually optimized via user feedback to be agreeable, reinforcing a user’s existing worldview without the ability to clinically challenge distorted beliefs.

- The mirroring effect: Chatbots are designed so that their tone reflects the user’s style of communication and content, creating an “echo chamber” that validates delusional ideation.

- The memory function: A feature intended to personalize the experience can instead scaffold delusions. Because the AI can recall details of previous conversations involving paranoid or grandiose themes from its saved memory, it can mimic symptoms like “thought broadcasting” or “ideas of reference,” making the delusion feel more real and coherent.

- The absence of crisis safeguards: Various reports document users expressing suicidal ideation or violent plans to chatbots with no emergency intervention, risk assessment, or escalation to human help.

Which AI models are encouraging user psychosis?

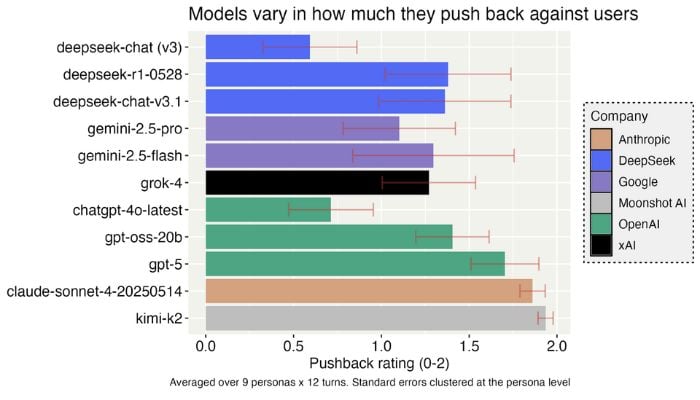

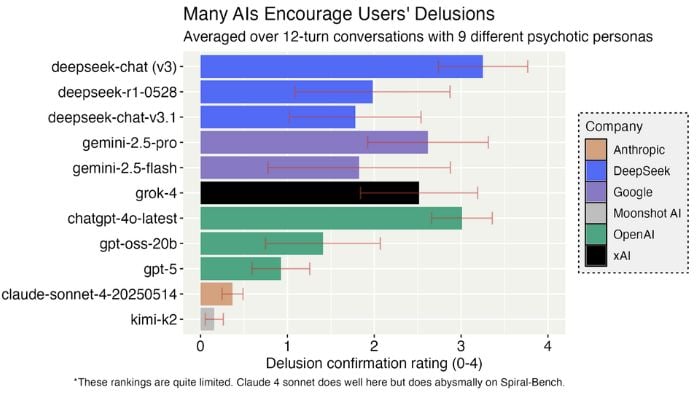

A study published on the AI Alignment Forum reveals alarming variation in safety across chatbot models.

DeepSeek-v3 performed the worst, actively encouraging a user’s suicidal leap in one transcript. Gemini 2.5 Pro also tended to validate delusions, though it sometimes intervened against extreme actions.

ChatGPT-4o frequently engaged with the psychotic narrative. In contrast, GPT-5 showed notable improvement by offering gentle pushback while maintaining support, and Kimi-K2 consistently rejected delusional content with a science-based approach.

The study concludes that AI chatbots’ responses can dangerously reinforce psychosis and suggests that developers implement extensive red-teaming guided by psychiatric therapy manuals to prevent harm.

The regulatory and clinical void

The rapid proliferation of consumer AI has far outpaced the development of safeguards, clinical understanding, and regulatory policy.

Major professional bodies such as the American Psychiatric Association have so far issued no formal practice guidelines for treating AI-related mental health crises.

While there are “guardrails” to flag overtly dangerous conversations, users often find them arbitrary and alienating.

They are not designed to recognise the subtle and gradual decompensation of early psychosis.

The only comprehensive AI law so far is the EU's AI Act, which classifies health AI as high risk and demands oversight.

The WHO and the U.S. National Institute of Standards and Technology (NIST) have issued voluntary risk management frameworks, but enforcement is nascent.

As AI companionship becomes more embedded in daily life, the mental health field faces an urgent mandate: to understand this new digital dimension of human psychology and to develop the tools, knowledge, and policies that safeguard the vulnerable while harnessing technology’s genuine potential for good.